This is the second blog in a series outlining how we approach AI for automarking and introducing our products in this area. You may also be interested in this blog, which explains the principles that underpin our automarking work and how we’ve conducted the research that led to these launches.

The majority of primary schools in England use some form of externally-created, standardised assessment as part of how they review their progress against the curriculum. There’s a lot of sense to doing so: standardised assessments give you an externally benchmarked analysis of how your students are performing, allowing you to spot gaps and plan interventions. But the approach has its drawbacks, including the huge amount of marking involved.

Smartgrade has grown rapidly since we launched in 2020, and we’re now offering standardised assessments to around 1,000 primaries (as well as hundreds of secondaries). We’ve already introduced some innovations that can reduce workload. For example, we’ve offered online HeadStart Reading and GPS assessments for some time: the multiple-choice elements are automarked, and the free-text questions are marked by the teacher using a neat user interface that makes it quicker than marking books.

Until now, however, the inescapable reality has been that some teacher marking was still needed. Today we’re delighted to announce that this is no longer the case! Following an extensive period of testing and validation, we’re now making AI-powered automarking available for all Year 1 to Year 5 termly online HeadStart Reading and GPS assessments. That means your students can take the tests on a tablet or computer, and you can leave Smartgrade to do ALL the marking. In recent tests, our AI automarking achieved 97% accuracy when marking those assessments, which is better than the average performance of a teacher marking the same assessments.

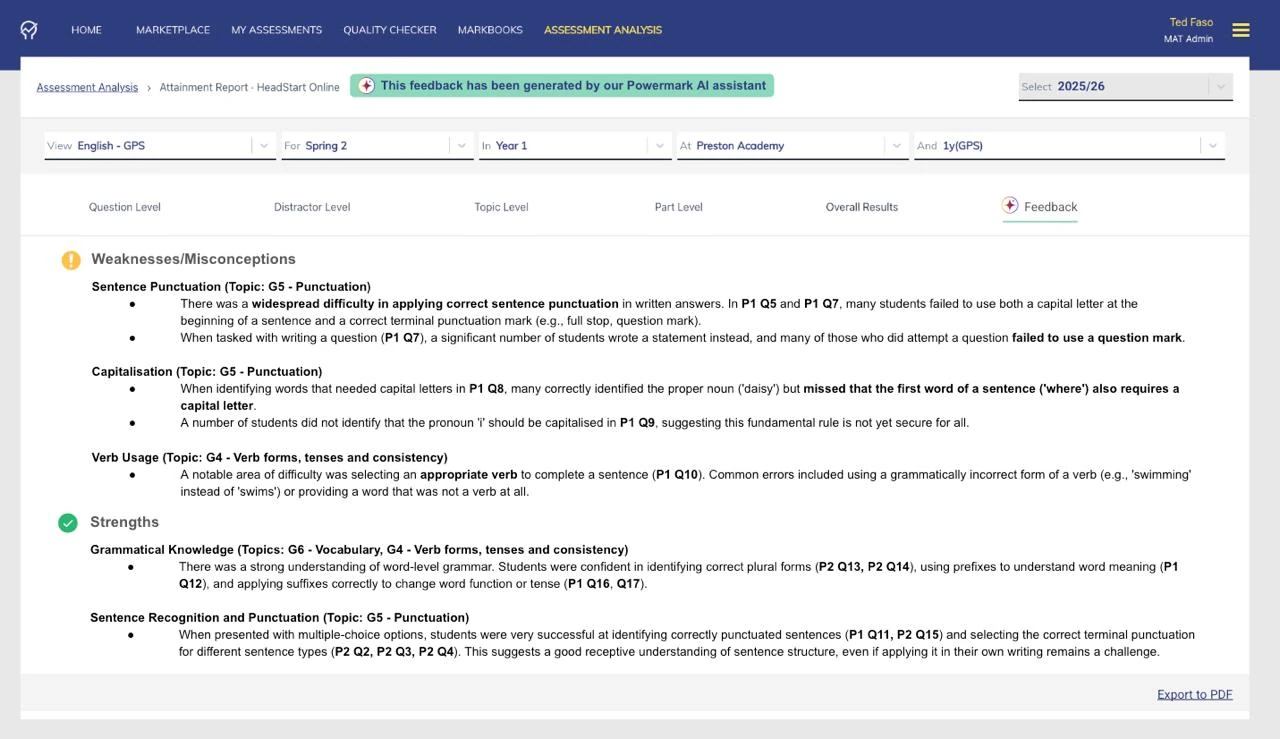

Marking insights in feedback reports

In developing this feature, we’ve been conscious that marking isn’t just about scoring a student: teachers also pick up valuable (if unstructured) insights into student performance through the marking process. For that reason, all customers using our automarking engine also get access to class-, year- and even MAT-level feedback reports that highlight student weaknesses and misconceptions, as well as notable strengths.

We have also put a lot of effort into mitigating the fact that, although our automarking is now on average more reliable than a teacher, it can still make mistakes. After Smartgrade automarks an assessment, teachers review moderation screens showing the marks the AI has awarded. They can edit any marks they think are incorrect and submit a reason for each change, which we use to help us improve. During our pilots, we found that moderation took between 10% and 30% of the time that traditional marking would take, and we’re making adjustments to the product that will further reduce the effort involved.

“My teachers are skipping along the corridors!”

Feedback from pilot users has been phenomenal. Angela Sweeting from Oasis Aspinal commented that our AI automarking “massively reduced workload and the teachers were surprised at how accurate it was.” She went on to say that “The feedback reports are also absolutely brilliant - we love them.” But perhaps our favourite comment from Ang was that “My teachers love it so much that they’re skipping along the corridors!”

If you like what you’ve heard, book in a demo with our team to see automarking of online assessments in action and discuss how it could work for your school. And if you’re interested but your school isn’t quite ready for online assessments, fear not: an offline version (where you quickly scan in handwritten papers) is in the works too!

Register your interest in the offline version here.
